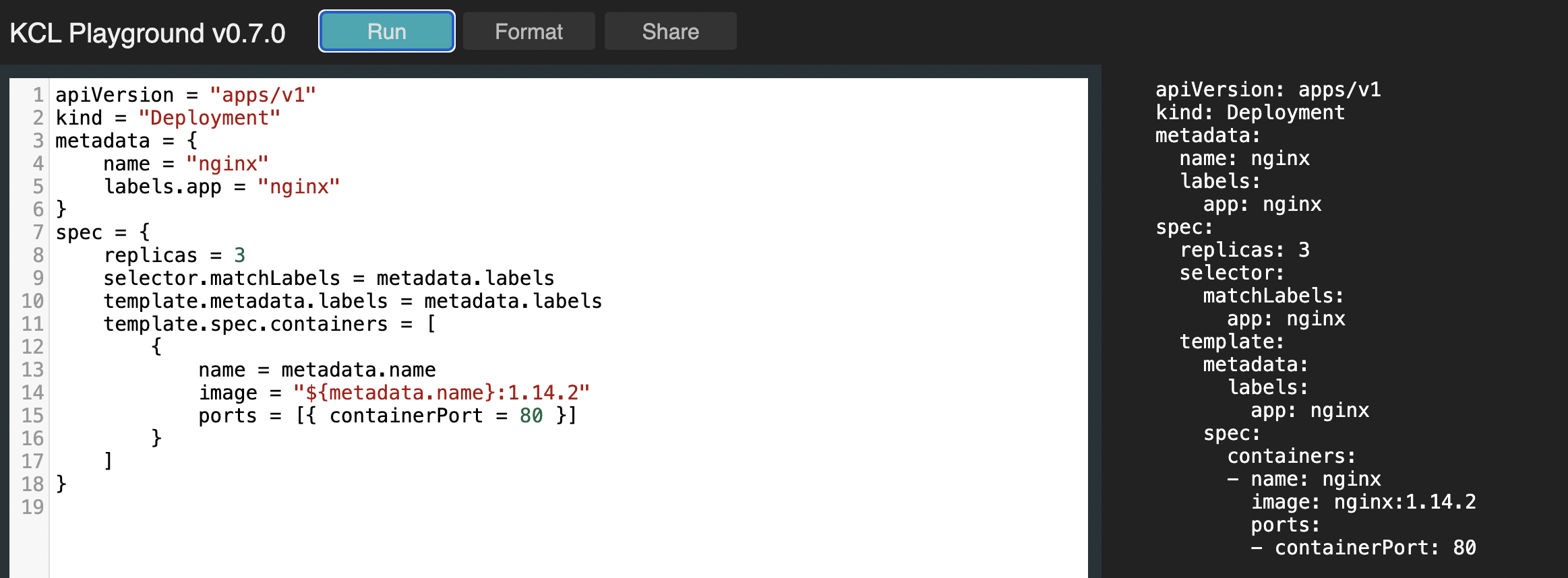

What is KCL

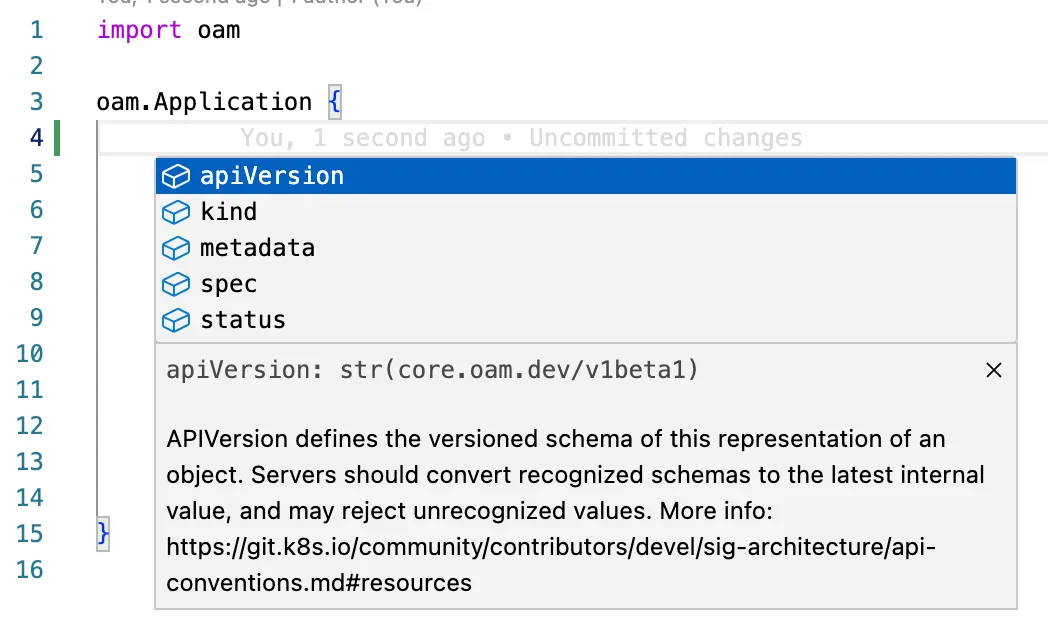

KCL is an open-source, constraint-based record and functional language that makes it easier to write complex configurations, including those for cloud-native scenarios. It is hosted by the Cloud Native Computing Foundation (CNCF) as a Sandbox Project. Drawing on advanced programming language technology and practices, KCL aims to improve the modularity, scalability, and stability of configurations. It makes logic simpler to write and offers easy-to-use automation APIs for integrating with homegrown systems.

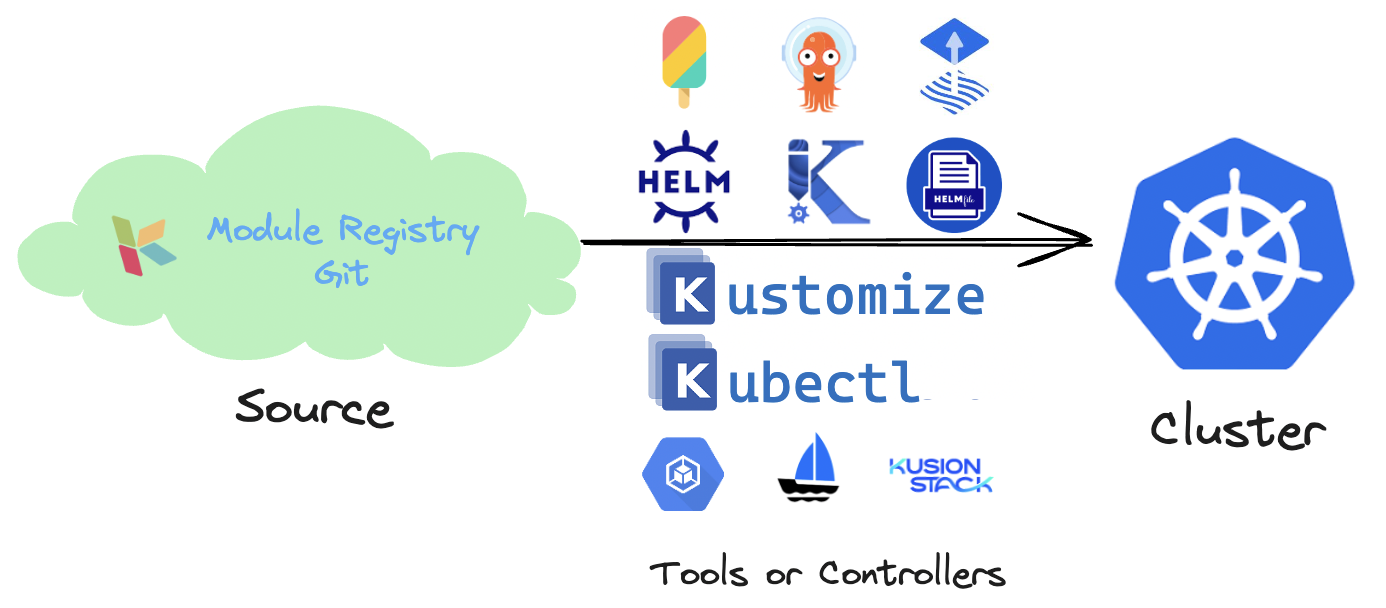

Several Ways to Deploy KCL Configurations to a Cluster

Because KCL can output YAML/JSON files, in principle any method that deploys YAML/JSON configurations to a cluster can also deploy KCL configurations. KCL files are usually stored in Git or in a module registry so that different roles and teams can share them easily. Beyond generating plain YAML/JSON, KCL also integrates directly with many deployment tools. The main ways to deploy KCL configurations to a cluster are as follows.

- Using kubectl: kubectl is the most basic way to access a Kubernetes cluster. We can deploy the Kubernetes YAML files generated by KCL directly to the cluster with the kubectl apply command. This method is simple and suits deployments with a small number of resources.

- Using CI/CD tools: CI/CD tools such as Jenkins, GitLab CI, CircleCI, ArgoCD, and FluxCD can automate GitOps-style deployment of Kubernetes YAML configuration files to the cluster. Once the pipelines and their configuration files are defined, building and deploying to the cluster happen automatically.

- Using tools that support the KRM Function specification: A Kubernetes Resource Model (KRM) Function lets users write functions in other languages, including KCL, to extend YAML templates with logic such as conditions and loops. Tools in this category include Kustomize, kpt, and Crossplane. Although Helm does not natively support KRM Functions, combining Helm with Kustomize makes this possible.

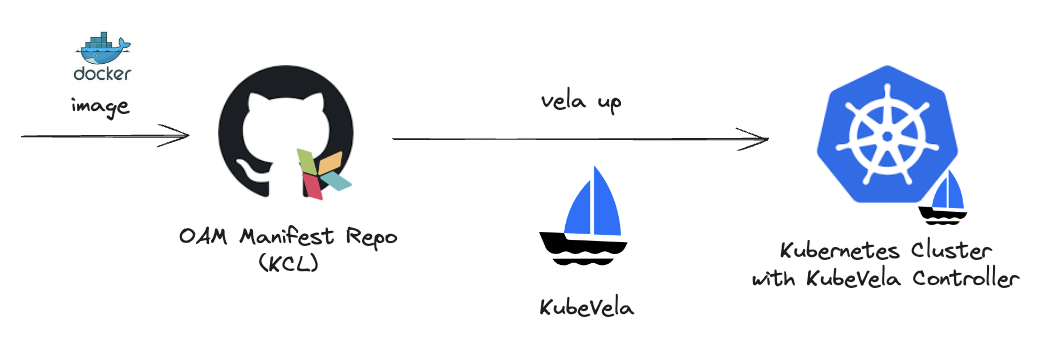

- Using client-side or runtime tools built on custom configuration abstractions, such as KusionStack and KubeVela. KCL also lets you define your own preferred application configuration model.

- Using the KCL Operator, which supports Kubernetes mutating and validating admission webhooks, to write runtime configuration or policies.

- Using configuration management tools: Configuration management tools such as Puppet, Chef, and Ansible can automate the deployment of Kubernetes YAML configurations to the cluster, deploying configurations dynamically by combining KCL templates with variables.

KCL supports multiple deployment methods and integrates with cloud-native tools for the following reasons:

- Flexibility: Different deployment methods suit different scenarios and needs, so offering several options lets users choose the one that best fits their situation.

- Cloud-native tool ecosystem: Kubernetes is a widely used platform with a large ecosystem of tools and technologies. Supporting multiple deployment methods lets users keep the tools and practices they already prefer.

- Specifications and standards: The Kubernetes community promotes standards and specifications such as OAM, the KRM Function specification, and Helm Charts. Through a unified KRM KCL specification and KCL modules, KCL can meet the requirements of these different specifications and standards.

- Automation and integration: Some deployment methods plug into automation tools and CI/CD pipelines to automate the deployment process, so offering several methods covers a wider range of automation and integration needs.

In short, supporting multiple deployment methods gives users greater flexibility and choice, allowing them to deploy applications or configurations according to their needs and preferences. The following articles describe how to use each deployment method:

Using kubectl

https://kcl-lang.io/blog/2023-11-20-search-k8s-module-on-artifacthub
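As a rough illustration of the kubectl path, the sketch below renders a KCL file with kcl run (which prints YAML to stdout) and pipes that output into kubectl apply -f -. It assumes both CLIs are installed and on the PATH; the entry file name, the argument names, and the use of -D key=value to pass top-level arguments (read in KCL with option()) are assumptions for illustration, not taken from the linked post.

```python
"""Sketch: render a KCL file and apply the resulting YAML with kubectl."""
import subprocess
from typing import Dict, List, Optional, Tuple


def build_commands(
    kcl_file: str,
    args: Optional[Dict[str, str]] = None,
    dry_run: bool = False,
) -> Tuple[List[str], List[str]]:
    """Return the argv lists for the render step and the apply step."""
    render = ["kcl", "run", kcl_file]
    # Assumed usage: each -D key=value sets a top-level argument that
    # KCL code can read with option("key").
    for key, value in (args or {}).items():
        render += ["-D", f"{key}={value}"]
    # "-f -" makes kubectl read the manifests from stdin.
    apply = ["kubectl", "apply", "-f", "-"]
    if dry_run:
        # Client-side dry run: objects are printed, not persisted.
        apply.append("--dry-run=client")
    return render, apply


def deploy(
    kcl_file: str,
    args: Optional[Dict[str, str]] = None,
    dry_run: bool = False,
) -> str:
    """Run `kcl run` and pipe its YAML output into `kubectl apply`."""
    render, apply = build_commands(kcl_file, args, dry_run)
    manifests = subprocess.run(
        render, check=True, capture_output=True, text=True
    ).stdout
    result = subprocess.run(
        apply, input=manifests, check=True, capture_output=True, text=True
    )
    return result.stdout
```

For example, deploy("main.k", {"env": "dev"}, dry_run=True) would render a hypothetical main.k and show what kubectl would apply. Keeping command construction separate from execution makes the commands easy to inspect or log before anything touches the cluster.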

Using CI/CD Tools

https://kcl-lang.io/blog/2023-07-31-kcl-github-argocd-gitops
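One possible GitOps pattern (an assumption here, not necessarily the workflow in the linked post) is to commit the KCL-rendered YAML to Git for ArgoCD or FluxCD to sync, and have a CI job fail whenever that YAML no longer matches its KCL source. A minimal sketch of such a drift check, assuming kcl is on the PATH and using hypothetical file paths:

```python
"""CI step sketch: fail the pipeline if committed manifests are stale."""
import subprocess
import sys
from pathlib import Path


def normalize(text: str) -> str:
    """Ignore trailing whitespace so formatting noise does not count as drift."""
    return "\n".join(line.rstrip() for line in text.strip().splitlines())


def has_drift(rendered: str, committed: str) -> bool:
    """True when the freshly rendered YAML differs from the committed copy."""
    return normalize(rendered) != normalize(committed)


def check(kcl_file: str, manifest_path: str) -> int:
    """Render kcl_file and compare it with manifest_path; return an exit code."""
    rendered = subprocess.run(
        ["kcl", "run", kcl_file], check=True, capture_output=True, text=True
    ).stdout
    committed = Path(manifest_path).read_text()
    if has_drift(rendered, committed):
        print(
            f"{manifest_path} is out of date; re-render it from {kcl_file}",
            file=sys.stderr,
        )
        return 1
    return 0
```

A pipeline step could call sys.exit(check("main.k", "deploy/manifests.yaml")) so that the GitOps controller only ever syncs YAML that matches its KCL source.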

Using KRM Function

https://kcl-lang.io/blog/2023-10-23-cloud-native-supply-chain-krm-kcl-spec
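To show the contract that KRM Function tools rely on, here is a sketch of a function that receives a ResourceList (apiVersion config.kubernetes.io/v1, with items and functionConfig fields) and returns it transformed. This is not the KCL function implementation: the functionConfig.spec.labels shape is hypothetical, and the sketch reads and writes JSON (a subset of YAML) to stay within the standard library, whereas orchestrators such as kpt or Kustomize normally exchange YAML.

```python
"""Sketch of the KRM Function contract: ResourceList in, ResourceList out."""
import json
import sys
from typing import Any, Dict


def transform(resource_list: Dict[str, Any]) -> Dict[str, Any]:
    """Add the labels from functionConfig.spec.labels to every item."""
    config = resource_list.get("functionConfig", {})
    # Hypothetical config shape for this sketch; a KCL-based function
    # would carry its transformation logic in its own functionConfig.
    labels = config.get("spec", {}).get("labels", {})
    for item in resource_list.get("items", []):
        metadata = item.setdefault("metadata", {})
        metadata.setdefault("labels", {}).update(labels)
    return resource_list


def main() -> None:
    # The orchestrator writes the ResourceList to stdin and reads the
    # transformed ResourceList back from stdout.
    resource_list = json.load(sys.stdin)
    json.dump(transform(resource_list), sys.stdout, indent=2)
```

The key design point of the specification is that a function only sees and returns resources; it never talks to the cluster, so the same function can run inside kpt, Kustomize, or a CI job.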

Using Custom Abstract Configuration Tools

https://kcl-lang.io/blog/2023-12-15-kubevela-integration

Using KCL Operator

https://kcl-lang.io/docs/user_docs/guides/working-with-k8s/mutate-manifests/kcl-operator
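The KCL Operator works through Kubernetes admission webhooks. To illustrate the protocol a mutating webhook speaks (this is not the KCL Operator's code, and adding a label is just a hypothetical mutation), the handler below takes an admission.k8s.io/v1 AdmissionReview request and answers with a base64-encoded JSON Patch:

```python
"""Sketch of a mutating admission webhook handler (AdmissionReview v1)."""
import base64
import json
from typing import Any, Dict, List


def escape_pointer(token: str) -> str:
    """Escape a JSON Pointer token per RFC 6901 ("~" -> "~0", "/" -> "~1")."""
    return token.replace("~", "~0").replace("/", "~1")


def label_patch(obj: Dict[str, Any], labels: Dict[str, str]) -> List[Dict[str, Any]]:
    """Build JSON Patch operations that add the given labels to obj."""
    existing = obj.get("metadata", {}).get("labels")
    if existing is None:
        # No labels map yet: create it with a single operation.
        return [{"op": "add", "path": "/metadata/labels", "value": dict(labels)}]
    return [
        {"op": "add", "path": "/metadata/labels/" + escape_pointer(k), "value": v}
        for k, v in labels.items()
    ]


def mutate(review: Dict[str, Any], labels: Dict[str, str]) -> Dict[str, Any]:
    """Answer an AdmissionReview request with a base64-encoded JSON Patch."""
    request = review["request"]
    patch = label_patch(request["object"], labels)
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": {
            "uid": request["uid"],  # the response must echo the request UID
            "allowed": True,
            "patchType": "JSONPatch",
            "patch": base64.b64encode(json.dumps(patch).encode()).decode(),
        },
    }
```

A validating webhook uses the same AdmissionReview envelope but returns allowed: False with a status message instead of a patch, which is where policy rules would plug in.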